Welcome to Logpoint Community

Connect, share insights, ask questions, and discuss all things about Logpoint products with fellow users.

-

New in KB: Ethernet interface name changes on reboot

The predictable network interface naming scheme is disabled by the following line in the GRUB configuration:

GRUB_CMDLINE_LINUX="net.ifnames=0 biosdevname=0"

As a result, on a system with more than one network interface card, interface names can change across reboots. For example, eth0 can become eth5, or eth3 can become eth2.

A reference for restoring the legacy naming scheme can be found here.

Note: The following steps require sudo access or must be run as the root user.

In /etc/udev/rules.d/70-persistent-net.rules, change the entries as shown below, using the correct MAC address for each interface.

Include all physical network interfaces. The example below assumes the system has six interfaces; eth0, with MAC address 28:05:ca:d8:fc:1a, is the one with an active link.

SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="28:05:ca:d8:fc:1a", ATTR{dev_id}=="0x0", ATTR{type}=="1", KERNEL=="eth*", NAME="eth0"
SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="31:e1:71:6b:68:ad", ATTR{dev_id}=="0x0", ATTR{type}=="1", KERNEL=="eth*", NAME="ether1"
SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="31:a1:71:6b:68:ae", ATTR{dev_id}=="0x0", ATTR{type}=="1", KERNEL=="eth*", NAME="ether2"
SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="30:b1:71:6b:68:af", ATTR{dev_id}=="0x0", ATTR{type}=="1", KERNEL=="eth*", NAME="ether3"
SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="28:cf:37:0d:0e:c0", ATTR{dev_id}=="0x0", ATTR{type}=="1", KERNEL=="eth*", NAME="ether4"
SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", ATTR{address}=="18:df:37:0d:0e:38", ATTR{dev_id}=="0x0", ATTR{type}=="1", KERNEL=="eth*", NAME="ether5"

Create a symlink:

ln -s /dev/null /etc/systemd/network/99-default.link

Then reload the udev rules, re-trigger them, and update the initramfs:

udevadm control --reload
udevadm trigger
update-initramfs -u

Reboot the system to see if the interface name is set as expected.
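Writing one rule per interface by hand is error-prone. The sketch below is a hypothetical Python helper (render_rules is not a Logpoint tool) that renders rule lines in the same layout as the example above from a MAC-to-name mapping. It lower-cases MAC addresses, on the assumption that sysfs reports them in lower case and that udev's == comparison is case-sensitive. Review the output before writing it to /etc/udev/rules.d/70-persistent-net.rules.

```python
from __future__ import annotations

# Same rule layout as the example above; {{ }} produces literal braces.
RULE_TEMPLATE = (
    'SUBSYSTEM=="net", ACTION=="add", DRIVERS=="?*", '
    'ATTR{{address}}=="{mac}", ATTR{{dev_id}}=="0x0", ATTR{{type}}=="1", '
    'KERNEL=="eth*", NAME="{name}"'
)


def render_rules(interfaces: dict[str, str]) -> str:
    """Render one udev rule per (MAC address -> interface name) entry."""
    names = list(interfaces.values())
    if len(set(names)) != len(names):
        raise ValueError("each interface name must be unique")
    lines = [
        # Lower-case the MAC so it matches the value reported by sysfs.
        RULE_TEMPLATE.format(mac=mac.lower(), name=name)
        for mac, name in interfaces.items()
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical two-interface excerpt of the six-interface example above.
    print(render_rules({
        "28:05:ca:d8:fc:1a": "eth0",
        "31:e1:71:6b:68:ad": "ether1",
    }), end="")
```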

For LACP bonding, simply use etherN names for all interfaces, reboot, and run the ethbonding command to configure the bond interfaces.

To verify whether bonding is working, SSH to the machine and run the command below:

cat /proc/net/bonding/bondN

Then unplug one of the cables and check whether the SSH connection still works.
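While a cable is unplugged, the output of cat /proc/net/bonding/bondN should show that slave as down. The small hypothetical Python helper below extracts the per-slave MII Status from that file. It assumes the usual Linux bonding driver layout, where each "Slave Interface:" line is followed by an "MII Status:" line; the sample text is illustrative, not real output.

```python
from __future__ import annotations

import os


def slave_mii_status(bonding_text: str) -> dict[str, str]:
    """Map each slave interface to the MII Status line that follows it."""
    status: dict[str, str] = {}
    current = None
    for raw in bonding_text.splitlines():
        line = raw.strip()
        if line.startswith("Slave Interface:"):
            current = line.split(":", 1)[1].strip()
        # The bond-level MII Status appears before any slave and is skipped.
        elif line.startswith("MII Status:") and current is not None:
            status[current] = line.split(":", 1)[1].strip()
            current = None  # only the first MII Status after a slave header belongs to it
    return status


SAMPLE = """\
Bonding Mode: IEEE 802.3ad Dynamic link aggregation
MII Status: up

Slave Interface: ether1
MII Status: up

Slave Interface: ether2
MII Status: down
"""

if __name__ == "__main__":
    path = "/proc/net/bonding/bond0"  # replace bond0 with your bondN
    if os.path.exists(path):
        with open(path) as fh:
            text = fh.read()
    else:
        text = SAMPLE
    for iface, state in slave_mii_status(text).items():
        print(f"{iface}: {state}")
```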

Another approach (for non-bonded setups), besides the one above, is to keep only one interface and detach the excess interfaces from the system.

-

New in KB: Optimization of Process ti() based queries

Process ti is used in Logpoint for search-time enrichment from the threat intelligence database. To use it, add

| process ti(field_name)

to the query. Example:

source_address=* | process ti(source_address)

This query enriches all logs whose source_address field matches the ip_address column of the threat_intelligence table. These source_address-to-ip_address (and other) mappings can be configured in the threat intelligence plugin's configuration settings.

The search query above can take a long time to complete if there are many logs within the search time frame. We can optimize such queries by filtering logs before piping them to the process ti() part of the query.

Example:

norm_id=* source_address=* | process ti(destination_address)

This query can be optimized as follows:

norm_id=* source_address=* destination_address=* | process ti(destination_address)

This query selects only the logs that contain destination_address, since there is no point in matching logs without a destination_address against the threat intelligence database on that field.

Although this helps, the query can still take a long time for a large number of search results, so it can be optimized further to complete much faster.

For this optimization, we first need to store, in a dynamic list, the column of the threat_intelligence table that is to be matched against the query results. For example, to map an IP field such as source_address or destination_address, we can run the following query to populate a dynamic list (e.g. MAN_TI) with the ip_address field of the threat intelligence database:

Table "threat_intelligence" | chart count() by ip_address | process toList("MAN_TI", ip_address)

Similarly, for another field, we can run:

Table "threat_intelligence" | chart count() by field_name | process toList("Dynamic_list", field_name)

Once the dynamic list is populated, it does not need to be populated again until the age limit set on the dynamic list (MAN_TI) expires.

After the dynamic list is populated, we can change the query

norm_id=* source_address=* destination_address=* | process ti(destination_address)

to

norm_id=* destination_address in MAN_TI | process ti(destination_address)

In generic form, an optimized query would be

Filter_query field_name in Dynamic_list | process ti(map_field_name)

This query will complete much faster than the original query.

Note: If the dynamic list created above contains a large number of entries, the UI may show an error reporting that the max_boolean_clause count has been reached. This means a parameter in the Index-Searcher, Merger, and Premerger services must be tuned to increase the number of boolean clauses allowed.

Parameter_name: MaxClauseCount

We need to increase this configurable parameter based on the number of rows present in the dynamic list.

This can be configured in lp_services_config.json, located at /opt/immune/storage, as follows:

{"merger": {"MaxClauseCount": <value>}, "premerger": {"MaxClauseCount": <value>}, "index_searcher": {"MaxClauseCount": <value>}}

After this change, regenerate the config files with the following command:

/opt/immune/bin/lido /opt/immune/installed/config-updater/apps/config-updater/regenerateall.sh

Keep increasing the value of MaxClauseCount until the UI search no longer throws an error and completes smoothly. A good rule of thumb is to start with double the number of entries in the dynamic list.
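The rule of thumb above can be written down as a small calculation. This is a hypothetical Python sketch, not a Logpoint tool: it assumes lp_services_config.json holds a JSON object with the merger, premerger and index_searcher keys shown above, and it merges the new values instead of overwriting any other settings that may already be in the file. After writing the file, the regenerateall.sh command above still has to be run.

```python
from __future__ import annotations

import json

# Services named in this article; key names follow the JSON example above.
SERVICES = ("merger", "premerger", "index_searcher")


def max_clause_settings(list_entries: int, factor: int = 2) -> dict:
    """Suggest MaxClauseCount for each service: factor x dynamic-list entries."""
    value = max(1, list_entries * factor)
    return {service: {"MaxClauseCount": value} for service in SERVICES}


def merge_settings(existing: dict, update: dict) -> dict:
    """Merge the update into an existing config, keeping unrelated keys."""
    merged = dict(existing)
    for service, settings in update.items():
        merged[service] = {**merged.get(service, {}), **settings}
    return merged


if __name__ == "__main__":
    # Hypothetical: the MAN_TI dynamic list holds 5000 entries.
    current: dict = {}  # in practice, json.load() the existing lp_services_config.json
    print(json.dumps(merge_settings(current, max_clause_settings(5000)), indent=2))
```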

This optimization can have some repercussions as well:

In a search head / data node configuration, if the bandwidth between the two instances is low, issues can occur in the search pipeline, because the query that is transferred from the search head to the data node grows in size after this optimization. If the bandwidth is not very low, this optimization should not have a significant negative impact on the system and can increase search performance significantly.

-

New Threat Intelligence v6.1.0 - out now

For those of you who do not subscribe to TI updates: version 6.1.0 is now available. See the information and the Knowledge Base package here: https://servicedesk.logpoint.com/hc/en-us/articles/115003790549

Regards,

Brian Hansen, LogPoint

-

Passing parameters to Actions

I am taking the first steps with the SOAR capability in LP7.

I am trying to use the Nexpose API to enrich device data and collect information such as the OS and the number of vulnerabilities, using the nexpose-search-assets Action, as it can filter on an IP address and doesn't need the Nexpose device ID.

The filters are in the request body in JSON format.

The Logpoint action for this has the following in the request body (out of the box there are a couple of additional optional fields which I have removed as they are not needed for this).

{"filters": [{"field": "", "operator": "", "value": ""}], "match": "all"}

The field and operator can be hard-coded for this action, as they won't change.

How do I configure the action so that when an IP address is passed into the action in a playbook, it gets inserted into the request body as the value?
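Whatever mechanism the action uses to substitute the playbook input, the request body it sends should end up with the shape below. This Python sketch only illustrates that target body; it does not show how Logpoint SOAR injects playbook variables, and the "ip-address" field and "is" operator are assumptions to check against the Nexpose (InsightVM) API search-criteria documentation. Building the body with json.dumps rather than pasting the value into a string avoids quoting and bracket mistakes.

```python
import ipaddress
import json


def build_search_body(ip: str, field: str = "ip-address", operator: str = "is") -> str:
    """Return the JSON request body for an asset search on a single IP.

    The default field and operator names are assumptions; the value is the
    only part that changes per playbook run.
    """
    ipaddress.ip_address(ip)  # raises ValueError if ip is not a valid address
    body = {
        "filters": [{"field": field, "operator": operator, "value": ip}],
        "match": "all",
    }
    return json.dumps(body)


if __name__ == "__main__":
    print(build_search_body("10.0.0.25"))
```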

-

Today's Scaling and Sizing Webinar not happening?

A webinar on scaling and sizing was scheduled for today, but it didn't happen.

Was there any reason for this?

-

LogPoint 7 Python error running script

Hi

Have there been any changes to the Python implementation in LogPoint 7?

I have two scripts that ran without errors in LogPoint 6, but now I get:

--

Traceback (most recent call last):
  File "device_export_lp6.py", line 17, in <module>
    from pylib import mongo
ModuleNotFoundError: No module named 'pylib'

--

Regards

Hans

-

Brochure

Dear Sirs

Where can I find a SIEM brochure to send to our customers in South America?

-

Cisco IronPort Email Security Appliance integration with UEBA. Why did it not work?

Hi,

I'm struggling with the integration of the Cisco IronPort Email Security Appliance (ESA) as a UEBA source.

The LogPoint documentation (Data Sources For UEBA, UEBA Guide latest documentation on logpoint.com) indicates that the ESA is supported.

The corresponding UEBA matching query is:

device_category=Email* sAMAccountName=* receiver=* datasize=* | fields,log_ts,sender,receiver,userPrincipalName,sAMAccountName,datasize,subject,status,file,file_count

The ESA never sends receiver and datasize in the same log; it only logs sender together with datasize. The ESA's sender and receiver logs are linked only via the MID (“message_identifier”).

Has anyone seen or done this integration of Cisco's ESA with UEBA? Is it working correctly?

Thanks.

BR

Johann

-

Will LP 6 still receive updates ?

Hello,

I have some minor questions about LP “policies” regarding security vulnerabilities:

Will LogPoint 6 still receive patches to fix security vulnerabilities? E.g. LP 7.0.1 fixes the polkit vulnerability. As the polkit vulnerability was discovered AFTER the latest patch for LP 6 (6.12.02), there is a good chance LP6 is affected by it too, but there is no patch available, and I didn't find any information stating that LP 6.12.02 is NOT affected by this vulnerability.

I am currently not keen to upgrade my LP installations from 6.12.2 to LP 7, but there have been several vulnerabilities for Linux recently (Log4Shell, polkit, dirty pipe, now zlib) with a good chance of LP being affected by them. If LP6 will not receive patches anymore, I would have to update (fast).

Generally speaking, is there any documentation on how long the different LP versions are supported?

Also, is there a website, newsletter, etc. to get a quick overview or (even better) an automatic notification when new LP patches or software updates are released?

Right now I log into the service desk, browse to the product site and check manually, which is rather time-consuming.

Andre

-

Unable to receive the logs from O365?

We are using Logpoint to fetch logs from Microsoft Office 365, but we are unable to receive email logs (e.g. email delivery) other than the mail delivery failure logs.

We are able to fetch logs like:

- Mail delivery failure

We are not able to receive logs like:

- Mail delivered

Any suggestions? Any solution?

-

Old Community closing down today.

Dear All,

We would like to inform you that the old LogPoint Community on https://servicedesk.logpoint.com/hc/en-us/community/posts is closing down today (25.03.2022), and all community activity will be redirected to this community.

-

Export large amount of raw logs

Hi @all

I need to export a large amount of raw logs - about 450 GB.

Is it possible for me to export this amount in one go via the Export Raw Logs functionality or do I need to export the raw logs sequentially?

Thx a lot!

-

Masterclass - Denmark recording

Thanks to everyone who attended our latest Masterclass for the Nordics. Don't forget to register for our next Masterclass on 26 April; you can read more here: https://go.logpoint.com/Nordic_Masterclass_2022. If you didn't get the chance to watch it live, you can see the recording and the presentation here.

-

How to create health alerts in Logpoint for monitoring

Hi Team,

Could you please help us create health alerts in Logpoint, for example when CPU usage reaches 95% or memory usage exceeds 80%?

Thanks & Regards

Satya

-

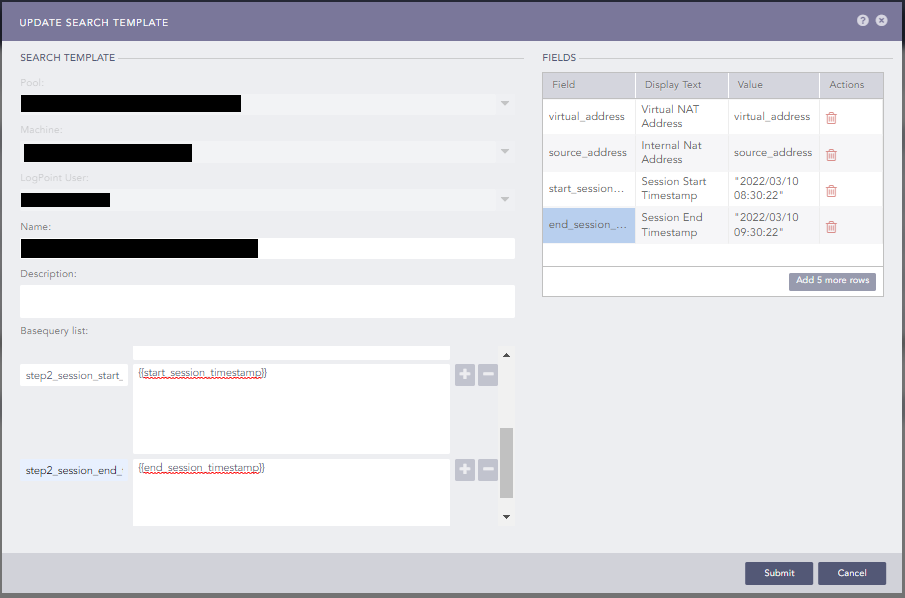

Using Timestamp in Search Template Variable?

Is it somehow possible to use a timestamp in a search template variable?

For example, I want to check whether log_ts is between two timestamps.

To do this, I added “fields” to the template config and used them in base queries. But it always complains either about the quotes (") or about the slashes inside the timestamp string (e.g. "2022/03/10 08:30:22").

See the example below:

If I then use the base queries in a widget, it throws these errors.

Detailed configuration to reproduce as follows:

Fields:

Field | Display Text | Value
start_session_timestamp | Session Start Timestamp | 2022/03/10 08:30:22
end_session_timestamp | Session End Timestamp | 2022/03/10 09:30:22

Base queries:

Name | Query
step2_between_timestamps | log_ts >= "{{ start_session_timestamp }}" log_ts <= "{{ end_session_timestamp }}"

Widget:

Name | Query | Timerange
Test | {{step2_between_timestamps}} | 1 Day

Is this a bug or am I doing it wrong?

-

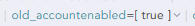

Query returning no logs when field is [ true ]?

Hey folks,

First time posting here - I’ve got a bit of a strange issue when querying for a specific type of log.

We have our Azure AD logging to Logpoint and I wanted to search for any account updates where the previous value of the ‘AccountEnabled’ field was ‘true’.

"ModifiedProperties": [
  {
    "Name": "AccountEnabled",
    "NewValue": "[\r\n false\r\n]",
    "OldValue": "[\r\n true\r\n]"
  },
]

As you can see from my screenshot below, there is this field in the normalized log which outputs the previous value, but when querying over the same timeframe after clicking on that specific field, the query shows 0 logs.

Am I doing something wrong, is this a bug in how the search query interprets it, or is it a normalisation issue?

Field that I’m interested in searching for (with the matching value)

Clicking the field and searching with this query shows 0 logs. I've also tried re-formatting the query, but no dice. We are using the built-in Azure AD normalizer, with most of the default fields. Any ideas on how to resolve or work around this would be great.
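One possible explanation, which is an assumption and not confirmed in this thread: the raw OldValue is not the string [ true ] but [ followed by a carriage return, a line feed, the word true, another CR/LF and ]. A value typed into the search bar with plain spaces would then not match exactly. The Python sketch below only demonstrates that difference on the snippet above; it does not show how Logpoint stores or compares the normalized field. If this is the cause, a wildcard value around true may be worth trying.

```python
import json

# The ModifiedProperties entry from the post, as it appears in the raw JSON.
RAW = '{"Name": "AccountEnabled", "NewValue": "[\\r\\n false\\r\\n]", "OldValue": "[\\r\\n true\\r\\n]"}'


def collapse_whitespace(value: str) -> str:
    """Replace every run of whitespace (including CR/LF) with one space."""
    return " ".join(value.split())


if __name__ == "__main__":
    old_value = json.loads(RAW)["OldValue"]
    print(repr(old_value))                               # decoded string contains CR/LF
    print(old_value == "[ true ]")                       # exact comparison fails
    print(collapse_whitespace(old_value) == "[ true ]")  # matches after collapsing whitespace
```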

-

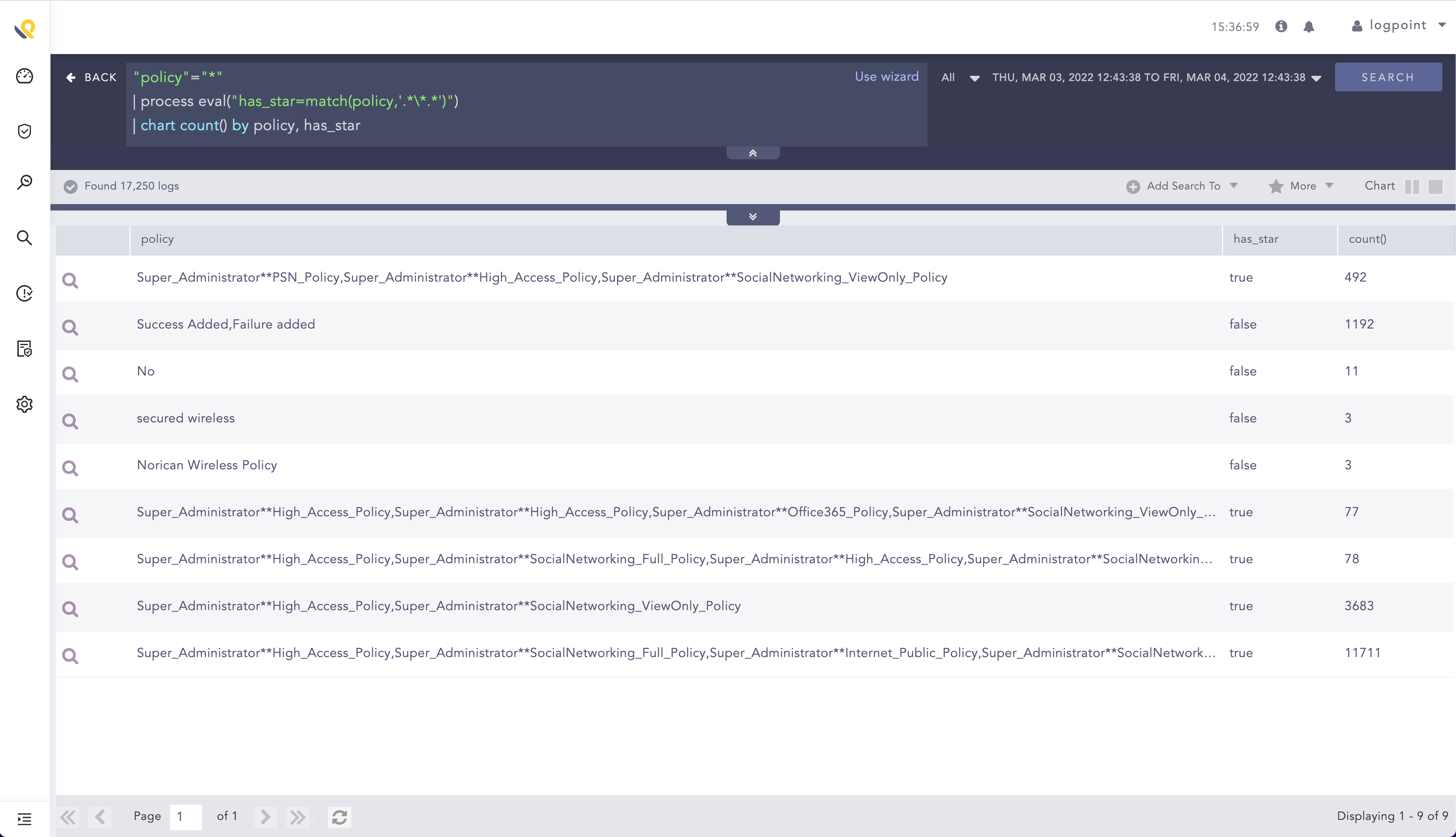

Searching for special characters in fields like the wildcard star "*"

When searching for special characters in field values in Logpoint, simply pasting them into a regular

Key = value

expression can often result in searches that do not work as the user intended, because the Logpoint search language interprets the character differently.

For instance, searching for fields with a star “*” character returns all results that have a value in that specific key, because Logpoint uses the star “*” as a wildcard character, which basically means “anything”. For example,

key = *

returns all logs with a field called key. Instead of using key-value pairs to search, we can use the built-in match function to find any occurrences of the value we are looking for. In this example, we will search for the star “*”, frequently referred to as the wildcard.

We have some logs with a field called policy, in which we would like to find all occurrences of the star character “*”. To do this, we first make sure that the policy field exists in the logs we search by adding the following to our search:

policy = *

Next, we use match, a function that can be used with the process command eval. The docs portal (Plugins → Evaluation Process Plugin → Conditional and Comparison Functions) shows that match takes a field and a regex and outputs true or false to a field:

| process eval("identifier=match(X, regex)")

In the example above:

- identifier is the field to which the boolean value true or false is returned. This can be any field name that is not currently in use, e.g. identifier or has_star

- match is the function

- X is the field in which we want to find the match

- regex is the regex, surrounded by single quotes ('')

With this in mind, we just need to create our regex, which can be done with your favourite regex checker: copy a potential value and write the regex. In this case, we wrote the following regex, which basically says: match any characters (including whitespace) up to a star character *, then match any characters (including whitespace) after it. This regex matches the full field value if it contains one or more stars “*”.

.*\*.*

Now we just need to add it to our search and do a quick chart to structure the results a bit. The search looks like this:

"policy"="*"

| process eval("has_star=match(policy,'.*\*.*')")

| chart count() by policy, has_star

The search results can be seen below:

From here, a simple filter command can be used to keep only the results with a star in the policy field by adding

| filter has_star=true

The same approach can also be used to match things that are not special characters, e.g. finding logs with a field value that starts with A-E or 0-5.
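Before putting a regex into the eval query, it can be checked locally, as suggested above with a regex checker. The Python sketch below tests the .*\*.* pattern and one possible pattern for the A-E or 0-5 example. Python's re module is only a stand-in regex checker here; Logpoint's regex engine and its escaping rules inside the eval string may differ, so treat a match here as a first check only.

```python
import re

# The article's pattern: anything, a literal star, anything.
# re.DOTALL lets "." also match newlines inside a field value.
HAS_STAR = re.compile(r".*\*.*", re.DOTALL)

# One possible pattern for "value starts with A-E or 0-5" (an assumption).
STARTS_A_E_OR_0_5 = re.compile(r"[A-E0-5].*", re.DOTALL)


def has_star(value: str) -> bool:
    # fullmatch requires the pattern to cover the whole value, as described above.
    return HAS_STAR.fullmatch(value) is not None


def starts_a_e_or_0_5(value: str) -> bool:
    return STARTS_A_E_OR_0_5.fullmatch(value) is not None


if __name__ == "__main__":
    for policy in ["allow *", "deny-all", "*.example.com"]:
        print(policy, has_star(policy))
```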

-

Customer open hours sessions - limited spots available! :)

We still have a few spots available for our exclusive customer open hour sessions with LogPoint experts from our engineering, customer success, support, and global services teams.

You might have questions like:

- How do I activate SOAR on top of my SIEM V7.0?

- How do I create a Trigger?

- Do I need to pay to activate my SOAR?

- Or something completely different. We are here to help you.

The Open Hour sessions are:

Upgrading to LogPoint 7 is free. Visit the LogPoint Help Center to download LogPoint 7.

We look forward to answering your questions and supporting your experiences with LogPoint SIEM+SOAR.

-

We are excited to announce our newest global service: Playbook Design Service.

Our converged SIEM+SOAR performs automated investigation and response to cybersecurity incidents using playbooks. Playbook Design Service is an additional service that helps your organization refine and optimize its manual incident response processes into documented workflows and automated playbooks tailored to your organization. Our service covers the complete playbook lifecycle, from understanding your specific needs to the creation, development, and testing of the playbook. Having our Global Services experts by your side lets you use your SIEM to its fullest extent, reduce your workload, and increase your ROI on security controls.

For more information, download our Playbook Design Service brochure: https://go.logpoint.com/playbook-design-service?_ga=2.39629923.1196326192.1645625385-1446914226.1645171249&_gac=1.261194623.1642752963.CjwKCAiA0KmPBhBqEiwAJqKK412rigizVIxknwM7T0qJ3YeUrzEpvCi5Q4a5OEID4NJS455Nz2QDixoCaZUQAvD_BwE

-

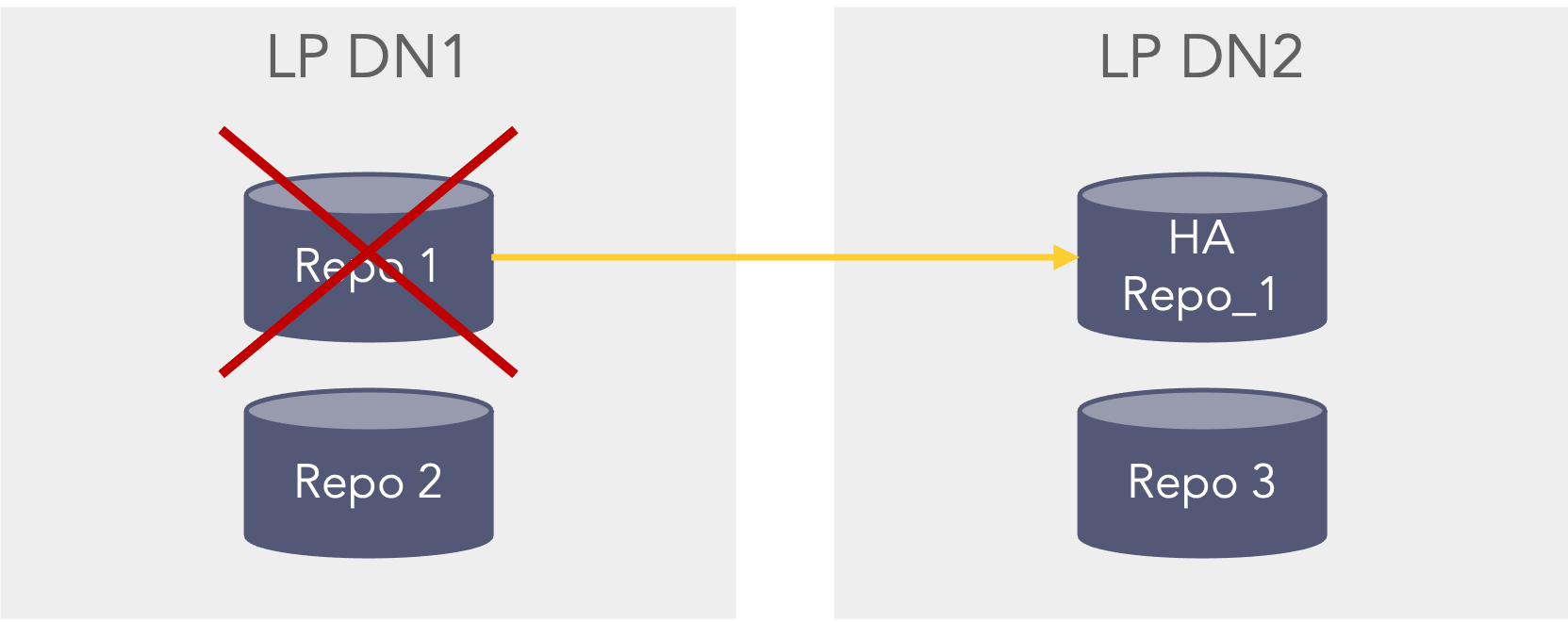

High Availability Repos usage

While deploying LogPoint with High Availability (HA) repos, I tested a few scenarios of how HA behaves that I thought would be worth sharing.

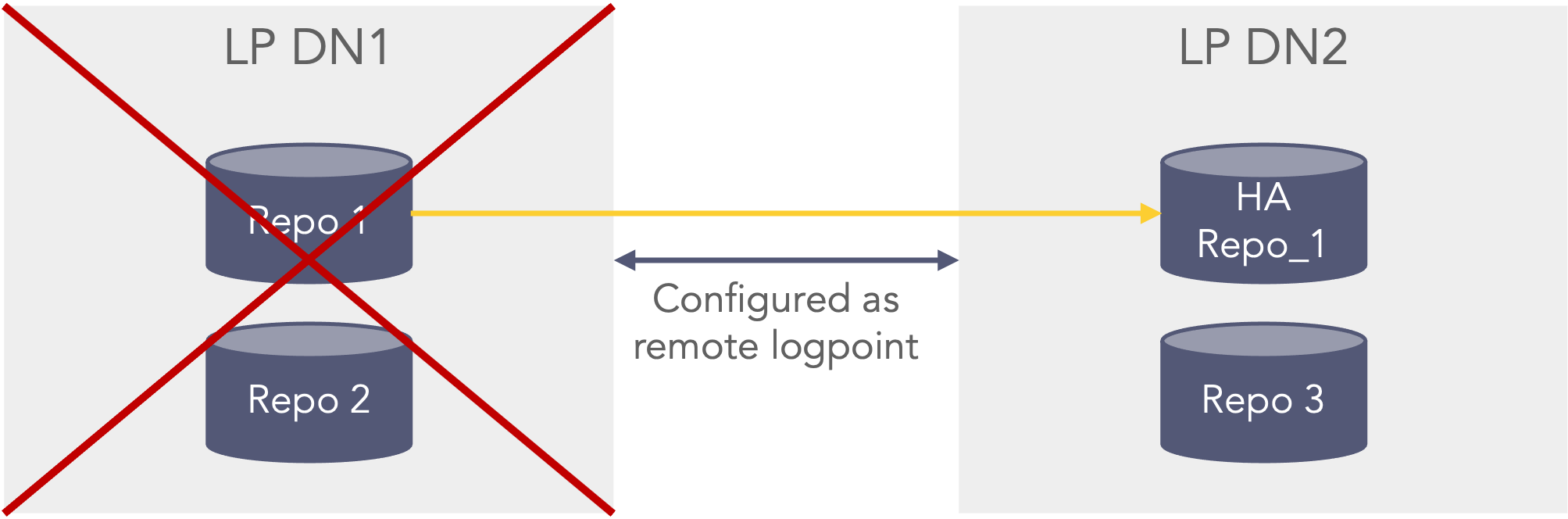

Repositories can be configured as high availability repositories, which means that the data is replicated to another instance. As a result, logs remain searchable in a couple of scenarios.

First scenario: if the repo fails on the primary data node (LP DN1), searches can use HA Repo 1 on the secondary data node (LP DN2). This could, for instance, happen if the disk was faulty or removed, or if the permissions on the path were set incorrectly. This scenario can be seen in the picture below: Repo 1, which is configured with HA, is unavailable on the primary data node (LP DN1) but still searchable, because the secondary data node (LP DN2) still has the data in HA Repo 1. In this scenario, Repo 2 and Repo 3 are also still searchable.

First HA scenario: one HA repo fails

In the second scenario, if the primary data node (LP DN1) is shut down or unavailable, the data can be searched from the secondary data node (LP DN2). However, this only works if the primary data node (LP DN1) is configured as a remote LogPoint on the secondary data node (LP DN2), so that the repos from the primary data node (LP DN1) can be seen and selected in the search bar on the secondary data node (LP DN2) (this is also the case before the primary data node goes down). The premerger then knows that it can search the HA repos stored on the secondary data node (LP DN2), even though the repos on the primary data node (LP DN1) cannot be reached. In this case, Repo 1 can be searched via HA Repo 1 and Repo 3 can also be searched, but Repo 2 is not searchable.

Second HA scenario: the full primary server is down or unavailable

-

Exclusive customer open hour sessions with LogPoint experts - Register now!

Are you using LogPoint 7 but have questions about SIEM or SOAR?

For a short period we are offering exclusive customer open hour sessions with LogPoint experts from our engineering, customer success, support, and global services teams.

You might have questions like:

- How do I activate SOAR on top of my SIEM V7.0?

- How do I create a Trigger?

- Do I need to pay to activate my SOAR?

- Or something completely different. We are here to help you.

The Open Hour sessions are:

Upgrading to LogPoint 7 is free. Visit the LogPoint Help Center to download LogPoint 7.

We look forward to answering your questions and supporting your experiences with LogPoint SIEM+SOAR.

-

Using Alert to populate a Dynamic List without the Alert firing

I wanted to share with the community how you can use an Alert rule to populate a dynamic list.

- Create the dynamic list you want to populate.

  - The age limit on the dynamic list is how long the data from the alert will stay in the dynamic list before the values are removed.

- Create the alert that will populate the dynamic list.

  - Search Interval: defines how often the search runs on the LogPoint. At every search interval, it updates the dynamic list if it finds new values, or prolongs the existing values in the dynamic list.

  - You can set the trigger condition on the alert to something like Greater than "99999" so that it practically never fires to the incidents view.

  - However, the alert still needs to find results in the | process toList() part of the search query to populate the dynamic list.

This is a way to use an alert to automate populating a dynamic list without the alert firing and cluttering the incidents view.

/Gustav

-

After updating to 7.0, TimeChart no longer works

Be aware that if you are going to upgrade to 7.0, there is a bug in the TimeChart function, and it will not work.

Answer from support:

Hi Kai,

We are extremely sorry for the inconvenience caused by this. Fixes have been applied in the upcoming 7.0.1 patch.

So if you need it, maybe you should wait until 7.0.1 is out.

Regards Kai

-

How to correlate more than 2 lines of logs?

We have a Cisco IronPort which is analyzing emails.

Each email analysis process generates multiple lines of logs that can be related to each other by a unique id (the normalized field “message_id”).

However, I am now out of ideas on how to correlate more than two log lines, e.g. with a join. My goal is to first search for logs where the DKIM verification failed. After that, I would like to see ALL log lines that contain the same message_id as the first "DKIM" log line. The number of log lines can vary.

Here are some of my approaches, which unfortunately do not give the desired result:

[message="*DKIMVeri*"] as s1 join [device_ip=10.0.0.25] as s2 on s1.message_id = s2.message_id

This only returns two log lines, not all lines matching s1.message_id = s2.message_id. A “right join” doesn't work either, even though the documentation indicates it should.

[4 message="*DKIMVeri*" having same message_id]

“having same” requires the exact number of logs to be specified, which is unknown. Furthermore, the result only makes the normalized fields in the "having same" clause usable, not those of the found events. Also, the “message” filter here breaks the whole concept.

Do you have any ideas how to solve the issue?
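The desired result can be described as a two-pass operation: first collect every message_id seen on a DKIM-failure line, then return all lines carrying one of those IDs, however many there are. The Python sketch below only illustrates that logic on hypothetical sample records; it is not Logpoint query syntax. Within Logpoint, one idea that might be worth testing is the dynamic-list pattern from the process ti article above (process toList() on message_id, then field_name in Dynamic_list), although whether it fits this case is untested.

```python
from __future__ import annotations

from collections import defaultdict
from typing import Callable, Iterable


def lines_for_flagged_messages(
    logs: Iterable[dict],
    is_trigger: Callable[[dict], bool],
) -> dict[str, list[dict]]:
    """Group all log lines by message_id, keeping only IDs seen on a trigger line."""
    logs = list(logs)  # two passes are needed
    flagged = {log["message_id"] for log in logs if "message_id" in log and is_trigger(log)}
    grouped: dict[str, list[dict]] = defaultdict(list)
    for log in logs:
        message_id = log.get("message_id")
        if message_id in flagged:
            grouped[message_id].append(log)
    return dict(grouped)


if __name__ == "__main__":
    sample = [  # hypothetical, simplified ESA records
        {"message_id": "101", "message": "DKIMVerifier: verification failed"},
        {"message_id": "101", "message": "queued for delivery"},
        {"message_id": "101", "message": "delivered"},
        {"message_id": "102", "message": "delivered"},
    ]
    result = lines_for_flagged_messages(sample, lambda log: "DKIMVeri" in log.get("message", ""))
    for message_id, lines in result.items():
        print(message_id, len(lines))
```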

-

How to restrict incident content to one event?

Hi folks,

When you set up an alert, all rows matching the search are sent to the alert together. In my use case this is counterproductive, because we need to be able to track SLAs and impacted customers. Basically, we're concentrating all EDR alerts from many platforms in one repo and want to trigger one incident per event.

I fear the limit parameter will hide other events, and playing with both limit and time range does not seem deterministic.

Does anyone know how I could get one incident per row returned by the alert search?

Thanks

-

RegEx in fulltext search?

I want to search for absolute Windows path names that do NOT start with the drive letters A, B, or C.

I tried queries like this:

path in ['[D-Z]:\\*']

but this doesn't work. Any ideas?
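As a starting point, the condition itself can be expressed as a regular expression and checked outside Logpoint. The Python sketch below is only an illustration: the pattern [D-Z]:\\ anchored at the start (a drive letter D to Z, a colon and a backslash, matched case-insensitively) is an assumption about what "absolute path" should mean here. Whether it can be used inside the match() function from the special-characters post above depends on how backslashes must be escaped in the Logpoint query string, which is not verified here.

```python
import re

# Drive letter D-Z (either case), then ":\". Paths on A:, B: or C: do not match,
# and neither do UNC paths such as \\server\share.
D_TO_Z_DRIVE = re.compile(r"[D-Z]:\\", re.IGNORECASE)


def on_drive_d_to_z(path: str) -> bool:
    # re.match anchors the pattern at the start of the string.
    return D_TO_Z_DRIVE.match(path) is not None


if __name__ == "__main__":
    for p in [r"D:\data\report.xlsx", r"c:\Windows\System32", r"\\server\share"]:
        print(p, on_drive_d_to_z(p))
```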

-

Microsoft Dynamic NAV application

Hey,

We have a requirement to analyse Microsoft Dynamics 365 logs. My understanding is that Dynamics NAV is now Dynamics 365 Business Central.

In this case, the logs will come from Microsoft Dynamics 365 Sales Enterprise. Does anyone have any experience using this LogPoint application, and will it parse the logs properly?

Many thanks,

-

nested joins in queries

Hello,

I am trying to automate getting some statistics I have to report to the executive relating to the number of resolved and unresolved alerts we are dealing with as a security team.

I'm starting with the alerts from Office 365, as I thought that might be easier. The end goal is to aggregate the alerts from a number of different sources and provide either a regular report or a dashboard for the executive. However, I am starting small.

Getting the number of resolved alerts is fairly straightforward:

norm_id="Office365" label="Alert" status="Resolved" host="SecurityComplianceCenter" alert_name=* | chart count()

However, getting the unresolved alerts is not quite as easy. My initial test involved negating the status field in the query above. Whilst this gave a result, it was not very accurate.

The problem appears to be that the status can be in one of three states, “Active”, “Investigating”, or “Resolved”, so my first attempt counted multiple log entries for the same alert.

After some more experimentation I have come up with

[norm_id="Office365" label="Alert" -status="Resolved" host="SecurityComplianceCenter" alert_name=*] as search1

left join

[norm_id="Office365" label="Alert" status="Resolved" host="SecurityComplianceCenter" alert_name=* ] as search2

on search1.alert_id = search2.alert_id | count()

This sort of works and the result is more accurate, but it is still very different from what Office 365 shows.

I think I need to make sure that entries with an Active or Investigating state count only once per alert_id before checking whether there is a corresponding Resolved entry for that alert_id.

I am not sure how to achieve this, whether it would need a nested join, or whether nested joins are even possible.

Any hints, or better ways of achieving this would be greatly appreciated.

Thanks

Jon
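The counting rule described above (each alert_id counted once, and only if no Resolved entry exists for it) can be stated precisely as a set difference. The Python sketch below illustrates only that logic on hypothetical events; it is not a Logpoint query. Design note: it treats an alert as resolved as soon as any Resolved entry exists, so an alert reopened after being resolved would be missed; if that can happen, comparing the latest status per alert_id would be needed instead.

```python
from __future__ import annotations

from typing import Iterable, Tuple


def count_unresolved(events: Iterable[Tuple[str, str]]) -> int:
    """Count distinct alert_ids that have no 'Resolved' entry.

    events: (alert_id, status) pairs, one per log entry; statuses are
    'Active', 'Investigating' or 'Resolved'.
    """
    seen: set[str] = set()
    resolved: set[str] = set()
    for alert_id, status in events:
        seen.add(alert_id)  # duplicates collapse, so each alert counts once
        if status == "Resolved":
            resolved.add(alert_id)
    return len(seen - resolved)


if __name__ == "__main__":
    sample = [  # hypothetical log entries
        ("a1", "Active"), ("a1", "Investigating"), ("a1", "Resolved"),
        ("a2", "Active"), ("a2", "Investigating"),
        ("a3", "Active"),
    ]
    print(count_unresolved(sample))
```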

-

McAfee ePO - Some logs are not normalized

Some logs coming from the McAfee ePO server are not being normalized. At first glance, it seems that McAfee introduced a new log type for PrintNightmare that LP does not recognize. I asked the customer, and he indeed uses McAfee to prevent users from installing new print drivers.

We are using LP 6.12.02 and McAfee application 5.0.1. The normalization policies include

- McAfeeEPOXMLCompiledNormalizer

- LP_McAfee EPO XML

- LP_McAfee EPO Antivirus

- LP_McAfee EPO Antivirus DB

- LP_McAfee EPO Antivirus DB Generic

I just added McAfeeVirusScanNormalizer. Maybe this will do the trick.

Example log (I replaced some information with REMOVED BY ME):

<29>1 2022-01-24T06:52:07.0Z ASBSRV-EPO EPOEvents - EventFwd [agentInfo@3401 tenantId="1" bpsId="1" tenantGUID="{00000000-0000-0000-0000-000000000000}" tenantNodePath="1\2"] ???<?xml version="1.0" encoding="UTF-8"?><EPOevent><MachineInfo><MachineName>REMOVED BY ME</MachineName><AgentGUID>{a231b576-9e3a-11e9-2dbc-901b0e8e1ab2}</AgentGUID><IPAddress>REMOVED BY ME</IPAddress><OSName>Windows 10 Workstation</OSName><UserName>SYSTEM</UserName><TimeZoneBias>-60</TimeZoneBias><RawMACAddress>901b0e8e1ab2</RawMACAddress></MachineInfo><SoftwareInfo ProductName="McAfee Endpoint Security" ProductVersion="10.7.0.2522" ProductFamily="TVD"><CommonFields><Analyzer>ENDP_AM_1070</Analyzer><AnalyzerName>McAfee Endpoint Security</AnalyzerName><AnalyzerVersion>10.7.0.2522</AnalyzerVersion><AnalyzerHostName>GPC2015</AnalyzerHostName><AnalyzerDetectionMethod>Exploit Prevention</AnalyzerDetectionMethod></CommonFields><Event><EventID>18060</EventID><Severity>3</Severity><GMTTime>2022-01-24T06:48:33</GMTTime><CommonFields><ThreatCategory>hip.file</ThreatCategory><ThreatEventID>18060</ThreatEventID><ThreatName>PrintNightmare</ThreatName><ThreatType>IDS_THREAT_TYPE_VALUE_BOP</ThreatType><DetectedUTC>2022-01-24T06:48:33</DetectedUTC><ThreatActionTaken>blocked</ThreatActionTaken><ThreatHandled>True</ThreatHandled><SourceUserName>NT-AUTORITÄT\SYSTEM</SourceUserName><SourceProcessName>spoolsv.exe</SourceProcessName><TargetHostName>REMOVED BY ME</TargetHostName><TargetUserName>SYSTEM</TargetUserName><TargetFileName>C:\Windows\system32\spool\DRIVERS\x64\3\New\KOAK6J_G.DLL</TargetFileName><ThreatSeverity>2</ThreatSeverity></CommonFields><CustomFields 
target="EPExtendedEventMT"><BladeName>IDS_BLADE_NAME_SPB</BladeName><AnalyzerContentVersion>10.7.0.2522</AnalyzerContentVersion><AnalyzerRuleID>20000</AnalyzerRuleID><AnalyzerRuleName>PrintNightmare</AnalyzerRuleName><SourceProcessHash>b0d40c889924315e75409145f1baf034</SourceProcessHash><SourceProcessSigned>True</SourceProcessSigned><SourceProcessSigner>C=US, S=WASHINGTON, L=REDMOND, O=MICROSOFT CORPORATION, CN=MICROSOFT WINDOWS</SourceProcessSigner><SourceProcessTrusted>True</SourceProcessTrusted><SourceFilePath>C:\Windows\System32</SourceFilePath><SourceFileSize>765952</SourceFileSize><SourceModifyTime>2020-07-08 08:54:39</SourceModifyTime><SourceAccessTime>2021-03-05 10:58:36</SourceAccessTime><SourceCreateTime>2021-03-05 10:58:36</SourceCreateTime><SourceDescription>C:\Windows\System32\spoolsv.exe</SourceDescription><SourceProcessID>2852</SourceProcessID><TargetName>KOAK6J_G.DLL</TargetName><TargetPath>C:\Windows\system32\spool\DRIVERS\x64\3\New</TargetPath><TargetDriveType>IDS_EXP_DT_FIXED</TargetDriveType><TargetSigned>False</TargetSigned><TargetTrusted>False</TargetTrusted><AttackVectorType>4</AttackVectorType><DurationBeforeDetection>28068597</DurationBeforeDetection><NaturalLangDescription>IDS_NATURAL_LANG_DESC_DETECTION_APSP_2|TargetPath=C:\Windows\system32\spool\DRIVERS\x64\3\New|TargetName=KOAK6J_G.DLL|AnalyzerRuleName=PrintNightmare|SourceFilePath=C:\Windows\System32|SourceProcessName=spoolsv.exe|SourceUserName=NT-AUTORITÄT\SYSTEM</NaturalLangDescription><AccessRequested>IDS_AAC_REQ_CREATE</AccessRequested></CustomFields></Event></SoftwareInfo></EPOevent>

-

Is this LP alert rule correct? (LP_Windows Failed Login Attempt using an Expired Account)

Hello,

I am currently looking at the alert rules shipped with LogPoint, trying to figure out which of them apply to our environment, and sometimes I find something that I think is not correct (keep in mind, I am neither a LogPoint nor an InfoSec expert). I do not know whether LogPoint has a bug tracker where I can post or ask for clarification.

E.g.

Alert rule - LP_Windows Failed Login Attempt using an Expired Account (LP 6.12.2)

“This alert is triggered whenever user attempts to login using expired account.”

The search query is

norm_id=WinServer* label=User label=Login label=Fail sub_status_code="0xC0000071" -target_user=*$ -user=*$ -user IN EXCLUDED_USERS | rename user as target_user, domain as target_domain, reason as failure_reason

As far as I understand the Windows documentation (4625(F) An account failed to log on. (Windows 10) - Windows security | Microsoft Docs), the sub-status 0xC0000071 means the login was attempted with an expired password, not with an expired account, which would be 0xC0000193.

So shouldn't the search query use the sub-status 0xC0000193, or am I missing something? (I do not see a big impact from a login attempt with an expired password, while I would like to be alerted when an expired account tries to log in.)
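For quick reference, the sub-status meanings relevant to this question can be kept in a small lookup table. The values below follow Microsoft's documentation for event 4625 (the page cited above); the Python helper itself is hypothetical. Parsing the code as a number also exposes typos such as a missing digit, since a 7-digit value will not be found in the table.

```python
# Selected 4625 sub-status codes, per Microsoft's event 4625 documentation.
SUB_STATUS = {
    0xC0000071: "expired password",
    0xC0000072: "account is currently disabled",
    0xC0000193: "account expiration",
    0xC0000234: "user is currently locked out",
}


def describe_sub_status(code: str) -> str:
    """Look up a sub_status_code string such as '0xC0000071'."""
    return SUB_STATUS.get(int(code, 16), "not in this table")


if __name__ == "__main__":
    for code in ["0xC0000071", "0xC0000193", "0xC000071"]:
        print(code, "->", describe_sub_status(code))
```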

Another question:

I would like to know what “label=User label=Login label=Fail” (or any other shipped label) actually decodes to. However, I cannot find the search package for the Windows labels to see how these search labels are “decoded”.
